Level zero: my thoughts on the Global Responsible AI Initiative launch event

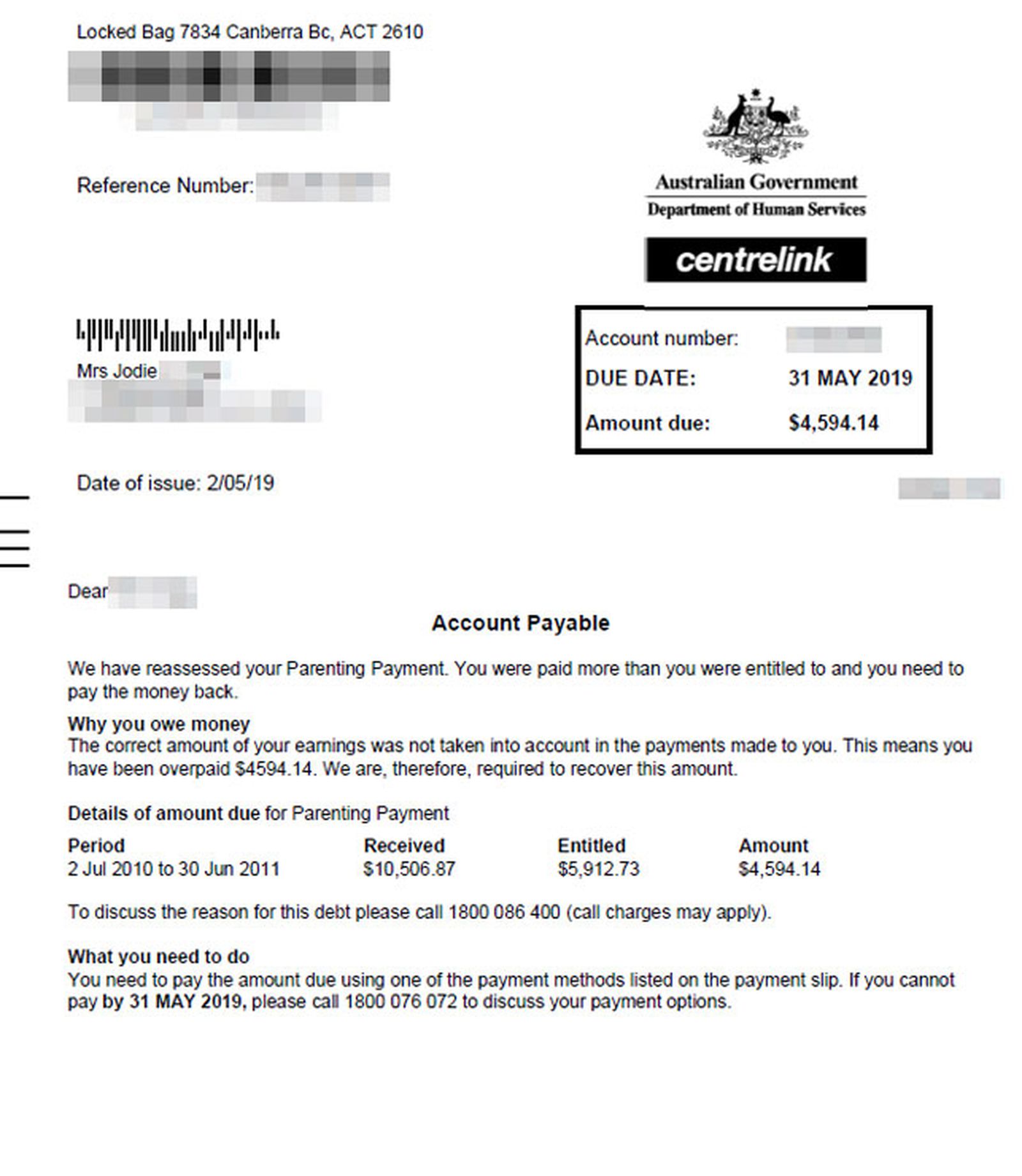

I wonder whether the Robodebt report recently published in Australia has shaped the general public's perception of AI and added to their fears and concerns. For my Europe-based readers who may not be familiar with the Robodebt scheme and the scandal around it: put simply, Australia's government social security agency was tasked with creating an automated system to improve the detection of welfare fraud and non-compliance. The resulting algorithm was based on data-matching, with little or no human involvement, and its outputs were trusted blindly. The income that welfare recipients had reported was compared with averaged income data from the tax office; any discrepancy triggered an overpayment alert and a letter saying, in effect, "it turns out you were paid more than you were entitled to, and now you have to pay us back (a lot)". The total debt raised against some 400,000 people across the country exceeded one billion Australian dollars, and the outputs of this unfair automated system went unquestioned until it was too late.
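To make the data-matching step a little more concrete, here is a minimal Python sketch of how averaging can manufacture a "discrepancy" for someone who reported their income honestly. It assumes, as has been widely reported, that the tax office's annual income figure was spread evenly across 26 fortnights and compared with the income reported fortnight by fortnight. The function names and the numbers are hypothetical; this is an illustration, not the actual Robodebt code.

```python
from __future__ import annotations

# Hypothetical illustration of income averaging; NOT the actual Robodebt code.
FORTNIGHTS_PER_YEAR = 26


def averaged_fortnightly_income(annual_income: float) -> float:
    """Spread an annual tax-office income figure evenly across every fortnight."""
    return annual_income / FORTNIGHTS_PER_YEAR


def flag_discrepancies(reported: list[float], annual_income: float) -> list[int]:
    """Return the indices of fortnights where the averaged income exceeds the reported income."""
    avg = averaged_fortnightly_income(annual_income)
    return [i for i, income in enumerate(reported) if avg > income]


# A hypothetical person who earned 2,600 a fortnight for 10 fortnights,
# then nothing for 16 fortnights while on benefits, and reported all of it honestly.
reported = [2600.0] * 10 + [0.0] * 16
annual = sum(reported)  # 26,000: exactly what the person reported over the year
flagged = flag_discrepancies(reported, annual)

print(averaged_fortnightly_income(annual), len(flagged))  # → 1000.0 16
```

The person declared exactly what they earned, yet every fortnight they spent out of work is flagged, because averaging attributes 1,000 of income to fortnights in which they had none. Without a human checking the actual payslips, each of those flags looks like an overpayment.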

At the launch of the Global Responsible AI Initiative, which I attended last night at the Queensland AI Hub, the optimism of the panelists and keynote speakers was quite remarkable, especially given the concerns of the media (and the general public) and the recent statements of several prominent AI scholars and developers, who are now labelled "AI doomers" because they focus on the threats posed by AI, including existential ones.

The panelists gave various reasons to be optimistic: AI can benefit our society by, for example, providing personalised learning opportunities that help people from all walks of life realise their goals, or by helping to solve the labour shortage Australia faces in a few years' time, when falling birth rates will leave too few people to do the work. There was a consensus among the panelists: banning AI is not a wise solution; instead, regulations should be developed, and efforts must be made to educate people about ethical and responsible applications of AI. Ryan Prosser, CTO of FloodMapp, added that one crucial aspect of developing AI regulations is the alignment of incentives (think superalignment).

One question from the audience sparked a particularly interesting discussion: "Who are we building these tools for?" Young people, the audience member pointed out, often seem confused and sometimes even disturbed by the whole social media and AI thing. "Are we sure", they asked, "they even want it for their future?"

Toni Peggrem, Executive Manager of QLD AI Hub, replied with a quote from a recent interview in which Elon Musk was asked to give young people some advice on how to choose a career amid all the AI-related uncertainty. After a long pause, he replied:

"that is a tough question to answer, I guess I would just say to follow their heart in terms of what they find interesting to do or fulfilling to do, and try to be as useful as possible to the rest of society <...>".

Musk acknowledged that finding meaning in life when AI can do your job better than you may be difficult. "If I think about it too hard", he added, "it frankly can be dispiriting and demotivating". Ultimately, Toni added, the younger generation will have to decide for themselves how they want to shape the world they inherit from us, rather than passively accept it as it is. As the conversation moved to the future of work (the topic of the summer school we at Zortify are hosting this coming August in Luxembourg), Chris McLaren, Chief Customer and Digital Officer at the Queensland Government, drew the audience's attention to the fact that while some jobs may disappear, new, previously unknown jobs will be created. The whole concept of work is changing, and we have no choice but to embrace this change and adapt.

Many industries are already adapting. For example, there is a new parliamentary inquiry into the use of generative artificial intelligence in the Australian education system, to which anyone interested can make a submission on topics such as the impact GenAI tools will have on teaching and assessment, the role of educators, the safety and ethics of using GenAI in education and research, and access to GenAI for children from disadvantaged families. Moreover, there are now good resources for educators using AI in the classroom.

It looks like, even before regulation catches up with the rapid development of AI, professional communities are forming their own groups of AI users who support each other. As a translator, for example, I have recently seen quite a few posts in online translation communities on how to use ChatGPT responsibly and ethically in our translation practice, and it is great to see such initiatives emerge.

Richi Nayak, a QUT professor and an expert in data mining, text mining and web intelligence, argued that instead of fighting students' use of GenAI to complete their assignments, we should teach them to use it smartly and ethically to enhance their learning. Chris mentioned that Japan is already doing this by introducing AI tools into its national school curriculum.

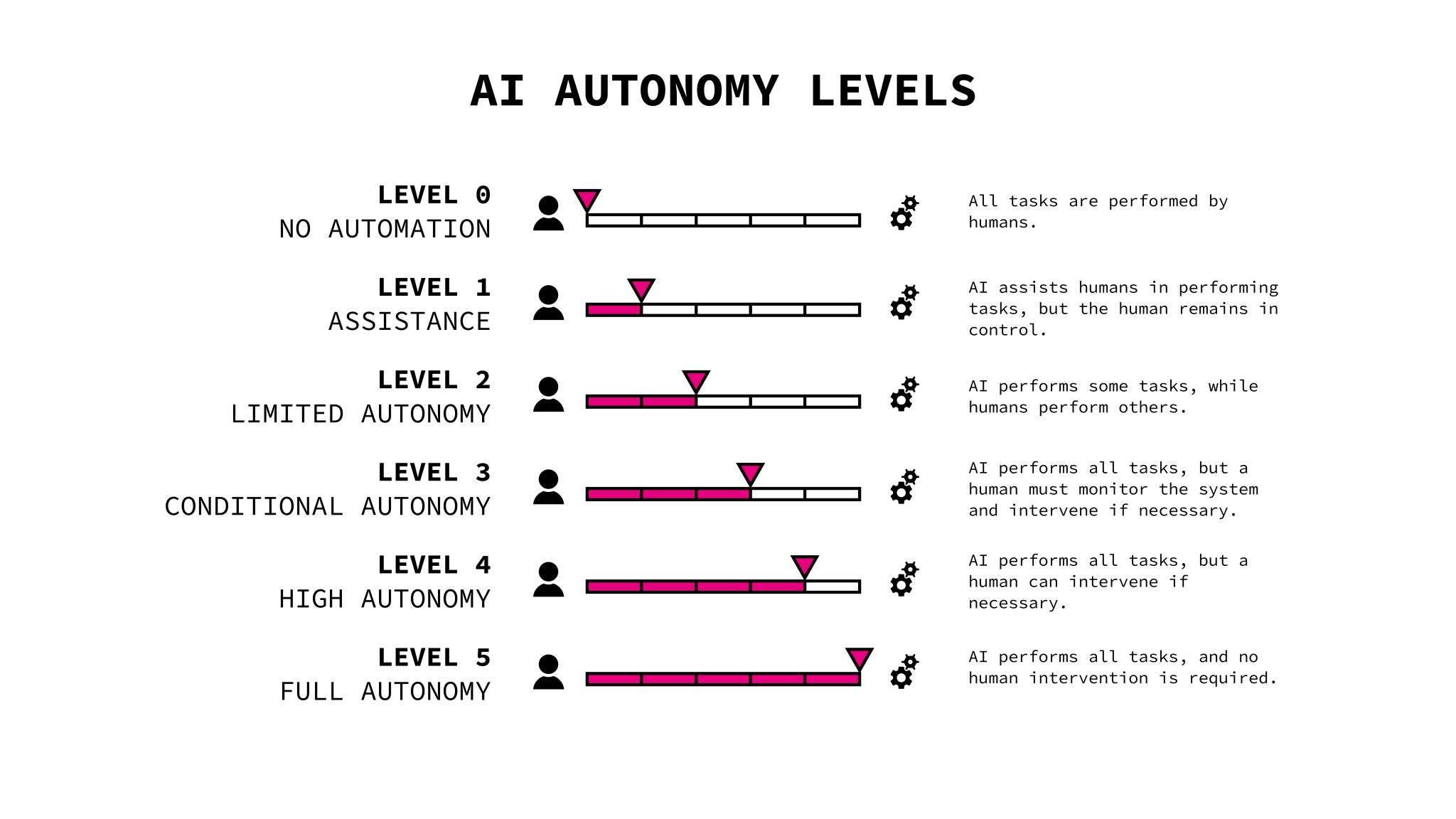

The event finished with a series of keynote speeches that covered the history, opportunities and risks of generative AI from different standpoints. Marek Kowalkiewicz, Professor and Chair in Digital Economy at QUT, discussed the illusion of confidence GenAI tools often give us and suggested that the outputs of such tools should be labelled according to the level of AI involvement, much as autonomous cars are classified by their level of autonomy. In a recent post, he writes:

"I often draw parallels with self-driving cars. There are varying degrees of autonomy, from fully manual to completely driverless. Interestingly, the most reliable instances fall in the middle, where human drivers remain in control while algorithms assist in navigating the road. Why shouldn't AI algorithms elsewhere be treated similarly?"

I found this idea particularly interesting. Some years ago, before GenAI, I attended a winter school on the ethics of autonomous vehicles, where we discussed the possibility of having a switch in your car. Which setting would you prefer: more self-driving (more autonomy for the car), where you completely trust the AI's decisions (but are you sure they align with your values?), or more human involvement (but how confident are you in your driving skills and, more importantly, in your moral judgement in a stressful, trolley-dilemma-type situation?). When GenAI is used to create content that may affect our lives, wouldn't it be good to have a similar selector, with a corresponding label attached to the generated content showing the recipient who is responsible for its creation and its impact, and leaving it to the recipient to decide how much to trust it?

Olivier Salvado, in turn, talked about AI-generated art, contemplating what direction it may take next: perhaps we will soon see 3D avatars replacing real actors in theatres, or plots that adapt to the audience on the fly.

I am currently reading a book about the making of MONA (the Museum of Old and New Art in Hobart, Australia). This museum, known globally as an institution that breaks the rules of the art world, has definitely changed the way many people perceive artworks and museums these days, and I can't help but wonder whether GenAI will be a catalyst for a similar shift in perception. Will AI-generated art become the norm one day? How will artists adapt, and will audiences be open-minded enough to truly appreciate it?

Finally, Jason Lowe shared some Oracle tech updates, along with free tools such as LiveLabs: definitely something to explore.

Summary (not AI-generated): it was a great event, with a fantastic panel and speakers. I wanted to say something like "Let's see what the future brings", but in fact I think all of us, whatever our background, should be actively engaging with these developments, learning as much as we can about them, expressing our opinions, and supporting each other as we adapt to the changing technological landscape.

Regenerate response.

Just kidding - the thoughts are all mine, Level 0.
